I recently had the opportunity to visit Dynamicland, an extremely interesting project under way in the middle of Oakland.

Dynamicland describes itself as “a new computational medium, where people work together with real objects in physical space…” but what is it, really? In practice, it is an entire building that has been comprehensively equipped with computer vision cameras and projectors, with a software platform that integrates everything. Almost every flat surface (tables, floors) and wall can detect objects, and animate them. It is intended as an environment in which to build applications, and in fact all of Dynamicland is built and maintained using its own tools.

However, the most important thing about Dynamicland is not the technology, but rather how it enables (and leverages) very natural interactions in a collaborative way. By the end of the evening, visitors were explaining Dynamicland to each other, and trying out various experiments.

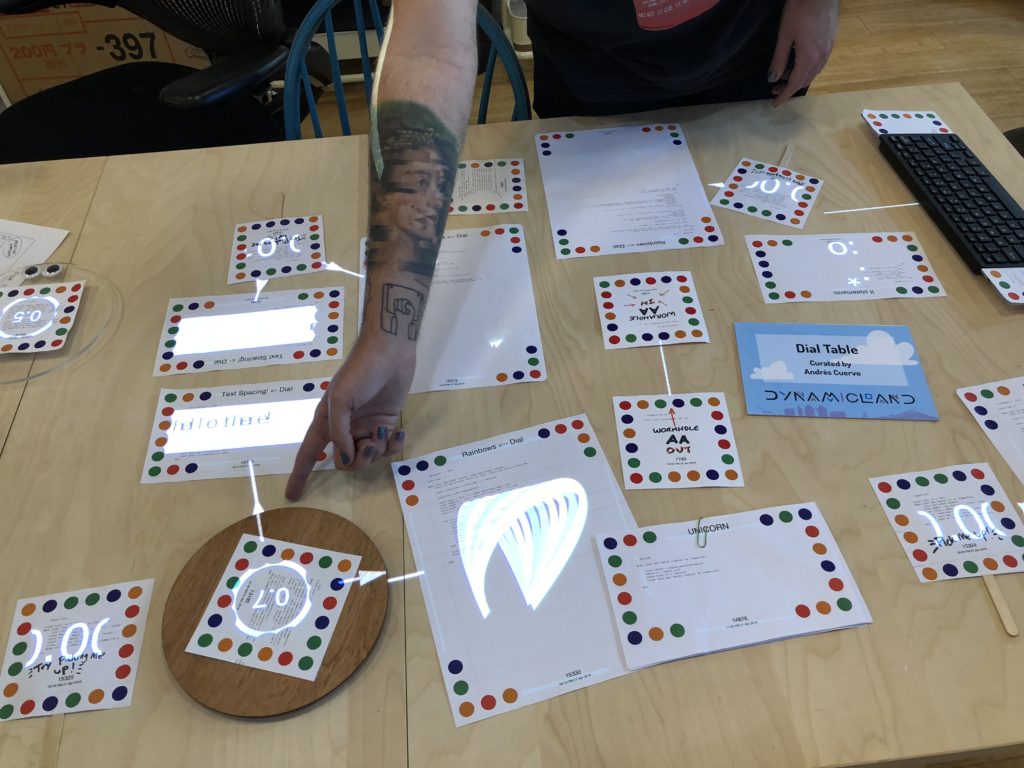

Everything with a colored dot is a Dynamicland object.

There is a lot to unpack here. Over the course of 2 hours I had a chance to play with various demos, talk to the team there, and start to get a sense of the possibilities. I’ll describe what it was like, then share some thoughts on execution, philosophy and the future. Nothing compares to actually visiting and experiencing it for yourself, but I hope to pique your curiosity!

A visit to Dynamicland starts with a brief introduction to what it is, and how it works. Overhead cameras look for patterns of colored dots on objects and paper, and projectors then display output onto them. Some of the objects have a projected “whisker”, a colored line that sticks out from the edge of the paper. Pointing a keyboard at any object lets you edit the code that runs it.

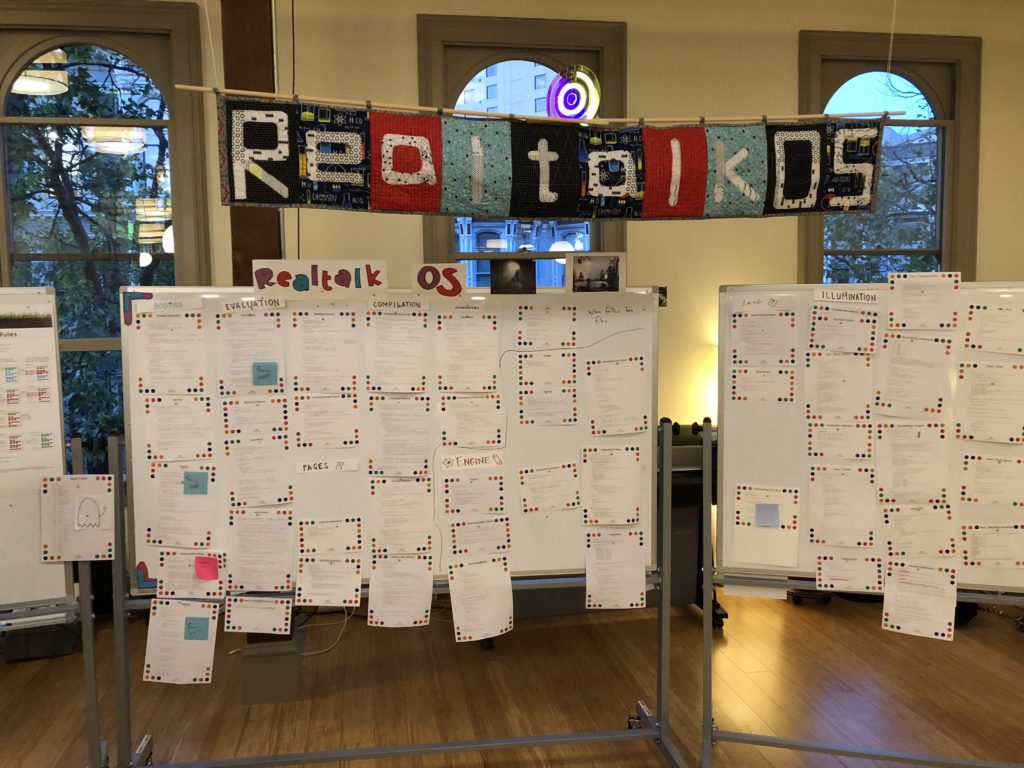

Welcome to Dynamicland!
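To make the dot-pattern idea concrete, here is a minimal sketch in Python of how a pattern of colored dots might be looked up to find the program attached to a page. Everything in it is an assumption for illustration: the `REGISTRY` table, the program names, the four-dot patterns, and the idea of trying rotations are all hypothetical, and I don't know how Dynamicland actually encodes or matches its dots.

```python
# Hypothetical registry: the sequence of dot colors read off a page,
# mapped to the name of the program attached to that page.
REGISTRY = {
    ("red", "green", "blue", "red"): "story-book",
    ("blue", "blue", "green", "red"): "map-overlay",
}


def rotations(pattern):
    """Return every cyclic rotation of a pattern.

    Assumption: a page can lie on the table at any orientation, so the
    camera may start reading the dots from any corner.
    """
    return [pattern[i:] + pattern[:i] for i in range(len(pattern))]


def identify(pattern):
    """Return the program name for a detected pattern, or None if unknown."""
    for candidate in rotations(tuple(pattern)):
        if candidate in REGISTRY:
            return REGISTRY[candidate]
    return None


# The same page, read starting from a different corner.
print(identify(["green", "blue", "red", "red"]))
# An object with no registered pattern (a coat, say) is invisible to the system.
print(identify(["red", "red", "red", "red"]))
```

The point of the sketch is only that the paper itself carries no computation: the dots are a key, and the program lives in the environment.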

Demos and applications

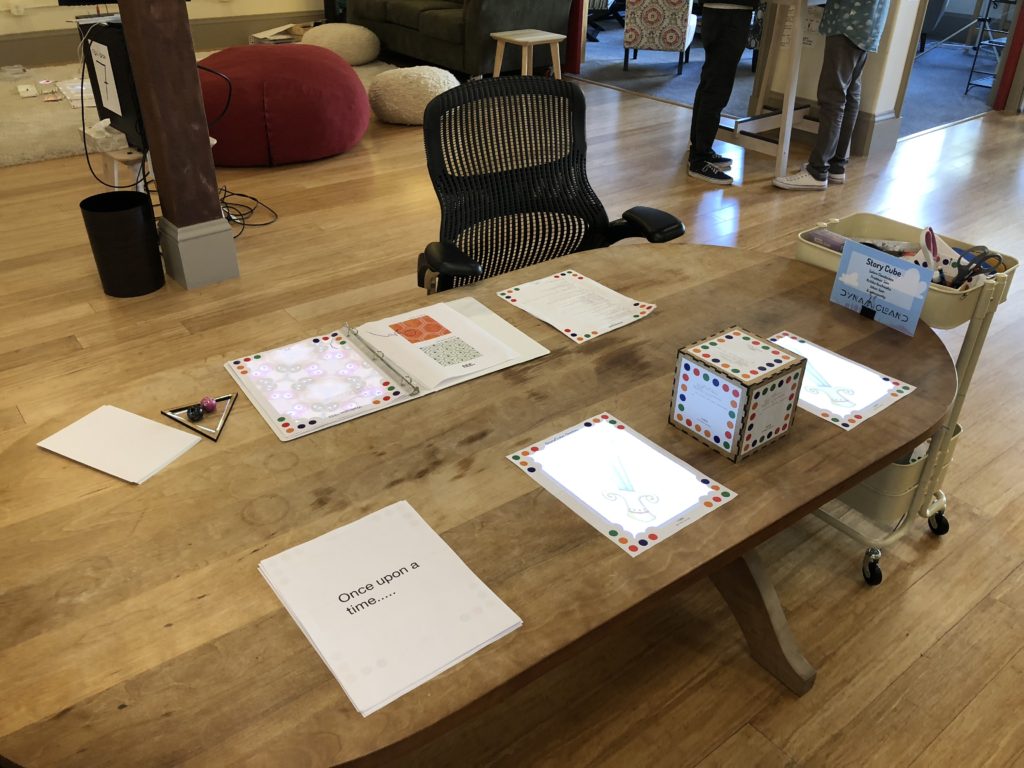

The first demos I played with were dynamic story books. By manipulating a physical cube, or by turning the pages of the book, images and videos were recalled which could be used to create a story.

Story creation tools with a physical cube to manipulate them.

Another fascinating demo was a binder with a sample of wallpapers (really tessellation patterns). Next to the book was a projected frame that reflected the tiling area of whatever sample was being shown. You could place objects into this frame, and see the resulting pattern in the book. In this case, the overhead cameras were being used not just to detect objects, but to capture video from the table.

Later in the evening a friend of mine discovered that she could set it up so that the output of a graphics program could overlap the kaleidoscope frame, easily generating animated patterns.

A really interesting application was a table that showed how a group of middle school teachers had used Dynamicland to organize their findings about teaching methods, and used it to present their results. Their notes were physically laid out on a table, and some of the notes were linked to photos or videos. A neat trick is that you could use a “pointer” (really just a piece of paper with code associated) to select a content item, and send it to a projection on the wall. The “projector” object, the picture frame, etc. are all just Dynamicland objects.

School teachers used Dynamicland to organize and present their findings

Here’s a video that shows how the pointer is used to present information on the wall.

One aspect of Dynamicland that is easy to overlook is that audio is incorporated throughout. Programs created on a table or wall can trigger audio playback on speakers in the vicinity. Here was an application that let you put together a simple player piano, letting you vary instrument sounds, tempo, scale etc. by manipulating pieces that represented these attributes.

Melody, sounds, tempo, etc. can be collaboratively combined

This demo allowed you to play with digital signal processing, combining and manipulating signal sources.

My friend found a booklet that, when opened, would cause sounds to be played. She found the page that played a nightingale song, and would announce herself by just opening the book to that page. At some point the Dynamicland staff needed the book, but they realized she liked this function, so they did something really striking: put the book on the table, pointed a keyboard at it, and pressed Ctrl-P (for print). Seconds later, an exact duplicate of that page came out of the printer, and she could walk around and use it to trigger the nightingale sample.

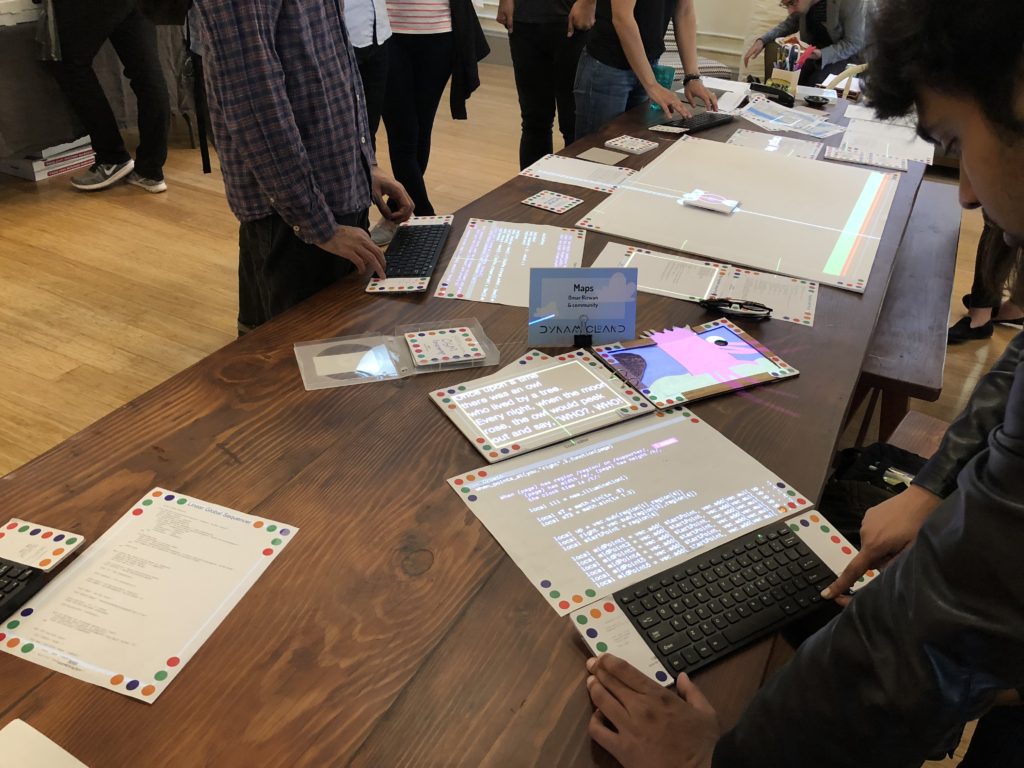

There were endlessly interesting applications. Here is an interactive mapping app. You could attach sheets to a main map to reveal different views.

Add interactive features to a map

Another was an interactive coding demo where you can control the program using a physical dial. The dial element was developed independently, and was integrated with the program with minimal effort. This is a key feature of Dynamicland: code can be remixed and combined by literally placing paper on a table.

A physical dial is used to control a program

Another app explored historical data visualization. Over the course of the evening it moved from a table to the floor, and then onto a wall, massively improving on a simple line graph as a way to visualize different data points. Of course, there will always be a use for a quick line graph maker, depending on the simplicity of the data being represented. (Most of the walls at Dynamicland are magnetic, so that papers and objects can be easily attached with tiny magnets.)

Exploring data visualization

The director of the center showed me a project created by her daughter, an analog joystick. The cameras can detect a colored dot on the joystick, and establish its displacement with relation to the frame. Then a snippet of code sends out x and y values corresponding to the joystick position. It was incredibly easy to just pick up the joystick and attach it to other programs in the space, for example to pan over a map, or change values in an audio simulation. (Or even play games…)

Analog joystick
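The joystick logic described above can be sketched in a few lines of Python. This is my own reconstruction under stated assumptions, not the code from the space: the `Frame` class, the `joystick_xy` function, the pixel coordinates and the choice of a -1 to 1 output range are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """Bounding box of the joystick frame in camera pixels (hypothetical)."""
    left: float
    top: float
    right: float
    bottom: float


def clamp(value: float) -> float:
    """Keep a value inside the range [-1, 1]."""
    return max(-1.0, min(1.0, value))


def joystick_xy(dot_x: float, dot_y: float, frame: Frame) -> tuple[float, float]:
    """Convert a detected dot position into x and y values in [-1, 1].

    (0, 0) is the center of the frame. Camera y grows downward, so it is
    flipped here to make "up" positive.
    """
    center_x = (frame.left + frame.right) / 2
    center_y = (frame.top + frame.bottom) / 2
    half_width = (frame.right - frame.left) / 2
    half_height = (frame.bottom - frame.top) / 2
    x = (dot_x - center_x) / half_width
    y = (center_y - dot_y) / half_height
    # Clamp in case the dot is detected slightly outside the frame.
    return clamp(x), clamp(y)


frame = Frame(left=100, top=200, right=300, bottom=400)
print(joystick_xy(200, 300, frame))  # stick at rest in the center
print(joystick_xy(300, 200, frame))  # stick pushed fully right and up
```

Because the output is just a pair of numbers, any other program that accepts x and y values (a map pan, an audio parameter) could consume it, which matches how easily the joystick was attached to other things in the space.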

How it works

Dynamicland is a combination of physical and software infrastructure. The first is the most obvious and visible, but the second is crucial and probably the most innovative aspect of the project.

The key physical infrastructure of Dynamicland is cameras that can detect marked objects, and projectors that can animate them. This is only the current state of the system; in the future it is intended that different sensors will be able to detect all kinds of objects, and various additional outputs will be possible. Audio output is also possible, and the walls of the building are prepared to allow things to be attached to them.

A bunch of cameras and projectors up in the rafters

It is worth highlighting how important this building-wide scale is to the Dynamicland proposition. There are other smart whiteboards & collaboration environments out there, but few (if any) have been built at the building scale. The nuts & bolts of the tech are less important than the scale at which they are deployed. It is (to some extent) this scale that really makes Dynamicland work for collaboration.

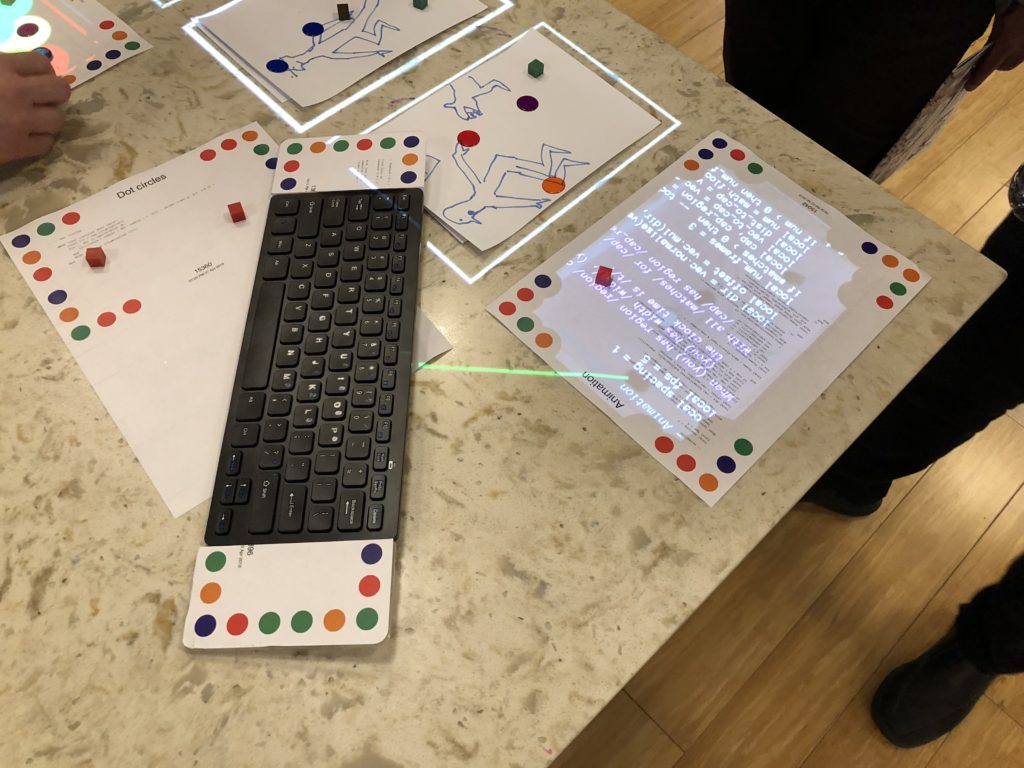

Dynamicland is at heart an environment for developing code collaboratively. Every object in the space has code associated with it, which can be edited by simply pointing a keyboard at it.

Pointing the “whisker” on a keyboard at an object immediately exposes the code

The code that you manipulate is in a language called Realtalk, and it is the basis of the operating system on which all of Dynamicland is built (called RealtalkOS). The entire code repository is accessible using the Dynamicland system. These bulletin boards aren’t just the documentation for the system, they ARE the system. You can pick up these pages and edit them. (Version control is important!)

The code that Dynamicland runs on.

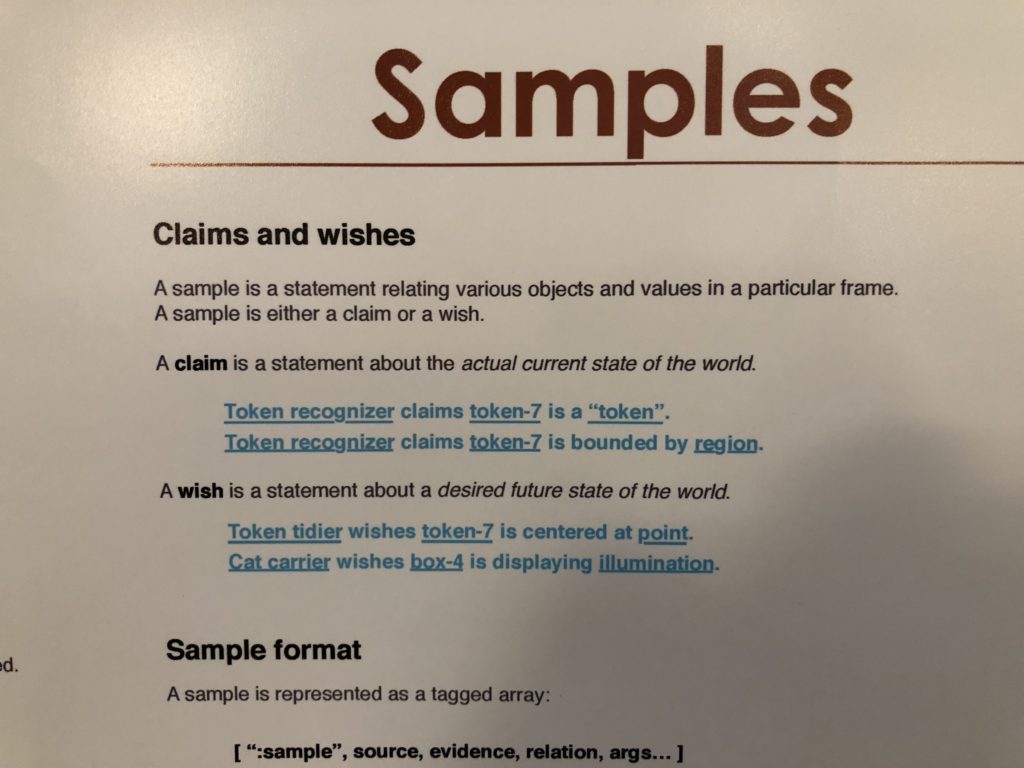

The Realtalk language sounds interesting. I thought someone mentioned that it is based on Lua, but I could be wrong. Some code examples highlight a very interesting property of the language: wishes and claims. This suggests that a running program cannot just assert or command, but rather has to get consensus from other modules before things can happen.

Claims and wishes in Realtalk
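To illustrate how I understood claims and wishes, here is a toy sketch in Python. It is not Realtalk syntax and not how RealtalkOS is implemented; the `SharedSpace` class, its methods and the `highlight` handler are all hypothetical names of my own. The idea it shows is that one program can only wish for something, and the wish has an effect only if some other program has offered to grant it.

```python
from collections import defaultdict


class SharedSpace:
    """A toy shared store of statements posted by programs (hypothetical)."""

    def __init__(self):
        self.claims = []   # (author, subject, attribute, value)
        self.wishes = []   # (author, subject, request)
        self.handlers = defaultdict(list)  # request -> functions that can grant it

    def claim(self, author, subject, attribute, value):
        """Post a fact about a subject for any other program to read."""
        self.claims.append((author, subject, attribute, value))

    def wish(self, author, subject, request):
        """Ask for something to happen, without doing it directly."""
        self.wishes.append((author, subject, request))

    def when_wished(self, request, handler):
        """Register a program that is able to grant a kind of wish."""
        self.handlers[request].append(handler)

    def step(self):
        """Grant each pending wish that has a handler; return the results."""
        results = []
        for _author, subject, request in self.wishes:
            for handler in self.handlers[request]:
                results.append(handler(self, subject))
        self.wishes.clear()
        return results


def highlight(space, subject):
    """Grant a 'highlighted' wish using whatever color has been claimed."""
    colors = [v for (_, s, a, v) in space.claims if s == subject and a == "color"]
    color = colors[-1] if colors else "white"
    return f"highlight {subject} in {color}"


space = SharedSpace()
space.claim("page 12", "page 12", "color", "blue")
space.when_wished("highlighted", highlight)
space.wish("page 7", "page 12", "highlighted")
space.wish("page 7", "page 12", "printed")  # nobody grants this, so nothing happens
print(space.step())
```

In this sketch "page 7" never touches "page 12" directly; it posts a wish, and a separate handler decides what to do with it, using the claims that are on the table at that moment.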

Reference manuals for Realtalk and Dynamicland are distributed throughout the space, in various 3 ring binders and notebooks. Of course, all the examples are tagged, and come to life. You can even edit the examples directly and see them run immediately.

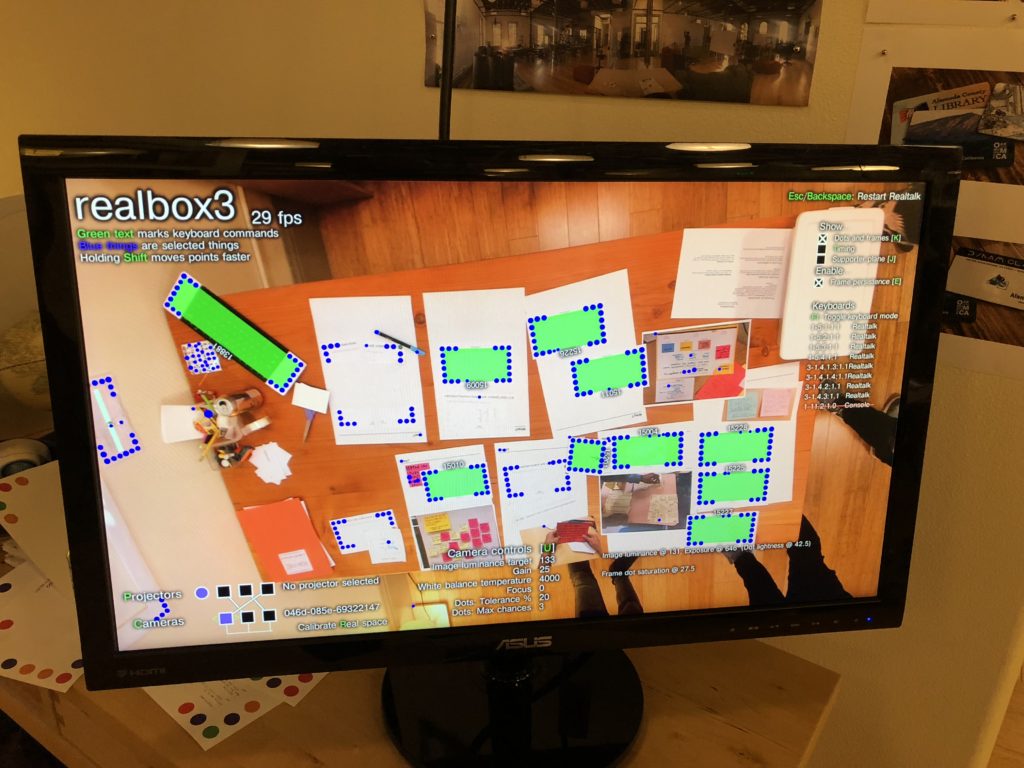

There were several monitors throughout the space that showed a “system’s eye view” of what was going on. While they are intended as a debugging display, it was interesting to refer to them for various keyboard shortcuts, and for insight into what was going on. However, they are not needed in regular use of the space.

What it means

There is a lot going on with Dynamicland: it is an ambient computing environment, a collaboration tool, a software development system, and an entire software environment. What is unique is the way that it has been pulled together, so that physical interactions enable working together.

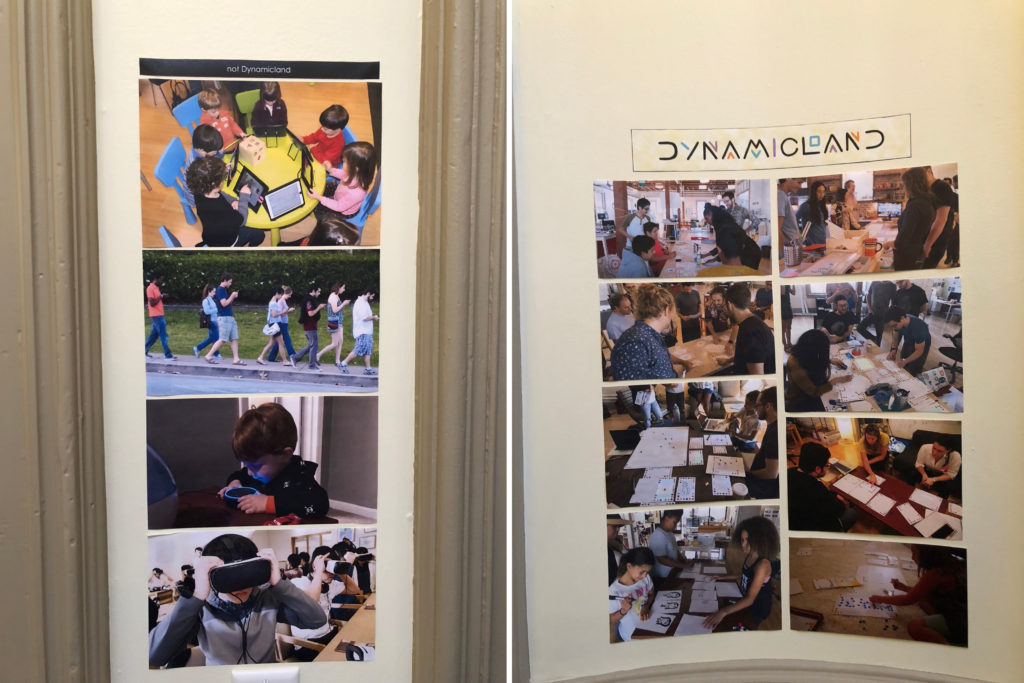

The following pictures show how this could manifest:

With and without Dynamicland

In the first picture, people are together, using technology, but each one is focused on their own screen. Even though they are sharing space, there is a sense of isolation. In Dynamicland, everyone can see what others are working on. It is possible to build things easily by working together.

Currently, the guiding narrative for Dynamicland is that people are working together to build software systems. However, this is different from the way most people might think of programming. The goal is not to build a marketable app, or develop a new operating system, etc. Dynamicland programming is about solving a problem in another domain, e.g. making a presentation, teaching a class, exploring a data set – and making software much more accessible as a tool for this.

The vision of the future presented here is not just about an ambient computing environment, one that can sense and respond to objects, but also one that exposes programming as a tool that you can apply in the physical environment, in a really transparent way. There is a two-way flow here: bringing code into the physical environment, and applying physical gestures and interactions to coding.

In general, the idea of tying together the digital and physical worlds in this way is very powerful. By the end of the evening I was even starting to get annoyed with objects (e.g. coats, backpacks, etc.) that were NOT visible to the system. It was very convenient to look for something, say a keyboard or a particular code fragment, and have the system tell you where it was (and highlight it in place).

It is also a very different approach to the “Internet of Things”: rather than embedding computation and sensors into various objects, the sensors and computation are in the environment, and anything can be made “smart” – like pieces of paper, and bits of clay with colored balls. The programming model is maintained by the back-end infrastructure: you still treat every bit of paper and “stuff” as if it is programmable, and you don’t really think about the computers that really do the heavy lifting. I’d like to see how this model can be extended to incorporate devices that do have significant computing built in, such as drones, robots & phones.

However, even smart devices need to work with networked infrastructure, and Dynamicland’s RealtalkOS is breaking new ground into what that could look like. Its programming paradigm accepts “unpredictable” real world input, manages issues of consensus & collaboration, and enables interactions across a wide space.

I’ll be interested to see where this goes!
